The following is an edited and annotated excerpt from my Shingo Award-winning book Lean Hospitals: Improving Quality, Patient Safety, and Employee Engagement.

To me, “mistakes” and “errors” are synonyms, so a discussion of “mistake-proofing” is the same as “error-proofing.”

A Serious Problem With Large, Unknowable Numbers

It is hard to know exactly how many patients are harmed by preventable errors or how many die as a result. Different studies use various methods to estimate the scale of the problem in different countries. A landmark 1999 Institute of Medicine (IOM) study said medical mistakes cause as many as 98,000 deaths a year in the United States.[i] In 2014, Ashish Jha, MD, professor of health policy and management at Harvard School of Public Health, testified to a U.S. Senate subcommittee, saying,

“The IOM probably got it wrong. It was clearly an underestimate of the toll of human suffering that goes on from preventable medical errors.”[ii]

In 2013, the Journal of Patient Safety published an estimate that between 200,000 and 400,000 deaths per year occur due to preventable harm, which would make medical error the third leading cause of death in the United States.[iii],[iv] Another study estimated that 18% of patients were harmed, and more than 60% of those injuries were considered preventable. Data from a 2011 study in the journal Health Affairs showed that medical errors and adverse events occur in one of three admissions, and errors may occur 10 times more frequently than the IOM study indicated.[v]

Medical harm is not a uniquely American problem, as per capita death rates are very similar in Canada and in other developed countries.[vi] Regardless of the exact numbers or the methodologies used to reach them, it is easy to agree that too many patients are harmed and that much of that harm is preventable through Lean methods and mindsets.

Moving Beyond Blaming Individuals

The late management professor Peter Scholtes said,

“Your purpose is to identify where in the process things go wrong, not who messed up. Look for systemic causes, not culprits.”[vii]

In recent years, several high-profile medical errors have made the news, in addition to the untold suffering that occurs daily. Josie King, an 18-month-old girl, died at Johns Hopkins after being given an additional dose of morphine by a visiting nurse after a physician had already changed her orders. The error worsened her dehydration as she should have been on the path to recovery.[viii] Josie’s tragedy has inspired her mother, Sorrel,[ix] and clinical leaders such as Dr. Peter Pronovost to tirelessly educate healthcare professionals and patients about the need to improve processes, systems, and communication in healthcare.[x]


The twin babies of the actor Dennis Quaid were harmed after multiple nurses mistakenly gave them adult doses of the blood thinner heparin while in the care of the neonatal intensive care unit (NICU) at Cedars-Sinai Hospital in California. This was the result of a number of process errors that should not have occurred.[xi] Nurses did not think to check for an adult dose, as the pharmacy should have never delivered an adult dose to a neonatal unit. Even if nurses had been on guard for that mixup, the packaging of heparin and Hep-Lock were too similar, two shades of light blue that could be hard to distinguish in the proverbial dark room at 2 a.m. Sadly, Cedars-Sinai could not prevent this medical harm after the same set of circumstances had led to the deaths of three babies at an Indianapolis, Indiana, hospital.[xii]

After the incident, Dr. Michael L. Langberg, the chief medical officer for Cedars-Sinai, said,

“This was a preventable error, involving a failure to follow our standard policies and procedures, and there is no excuse for that to occur at Cedars-Sinai.”[xiii]

Situations like this illustrate the need for leaders to oversee and manage standardized work (as discussed in Chapter 5). Failures to follow standardized work must be detected proactively, rather than reacted to after harm has occurred. Leaders must work with staff to make sure it is possible to follow policies and procedures, improve training, and reduce waste to make sure enough time is available to do work the correct way.

Creating Quality at the Source through Error Proofing

Ensuring quality at the source (the Japanese word jidoka), through detecting and preventing errors, is one of the pillars of the Toyota Production System.[xiv] The history of jidoka dates to the time before the company even built cars. Sakichi Toyoda invented a weaving loom that automatically stopped when the thread broke, saving time, increasing productivity, and reducing the waste of defective fabric.[xv] The automatic weaving loom and that level of built-in quality have served as an inspiration throughout Toyota and among adopters of the Lean approach.

Thinking back to the Quaid case, even if we have not had a death at our own hospital from a mix-up of heparin doses, the opportunity for error and unsafe conditions might be present. Preventing that same error from occurring in our own hospital is good for the patients (protecting their safety), good for the employees (preventing the risk that they will participate in a systemic error), and good for the hospital (avoiding lawsuits and financial loss, while protecting our reputation as a high-quality facility).

Being Careful Is Not Enough

When managers blame individuals for errors, there is an underlying—but unrealistic—assumption that errors could be prevented if people would just be more careful. Errors are often viewed as the result of careless or inattentive employees. Warning, caution, or “be careful” signs throughout our hospitals are evidence of this mindset. If signs or memos were enough, we would have already solved our quality and safety problems in hospitals and the world around us.

A good exercise is to go on a “gemba walk” through a department, looking for signs that remind or tell employees to be careful. Each sign is an indicator of a process problem and evidence that the root cause of the problem has not been properly addressed through error-proofing. A good metric for the “Leanness” of a hospital might be the number of warning signs—fewer warning signs indicate that problems have been solved and prevented, eliminating the need for less effective signs and warnings.

For example, we might see a sign in the pharmacy that reads: “Please remember fridge items for cart delivery.” This suggests that this process error was made at least once. We need to ask why it was possible to forget the refrigerated items. Does the department not have a standard checklist that is used before going out to make a delivery? Is the refrigerator in an inconvenient location, making it easy to forget about when employees are in a rush?

One hospital laboratory had two analyzers that performed similar testing. For one analyzer, five specimen tubes would get loaded into a plastic rack, which was then inserted into the analyzer. The newer analyzer had a warning from the manufacturer printed on the loading chute: “CAUTION: When setting the stat sample rack, confirm the direction of the rack.” When asked how often a rack was inserted incorrectly, facing the wrong way, the lab supervisor said it happened quite frequently, causing the rack to jam and not load properly, delaying test results and taking up time. The older analyzer, used as a backup, was physically error-proofed, making it impossible to load the rack in a backward orientation; it was a far more effective approach than the warning sign on the other.

Nurses’ stations might be full of signs, such as “Avoid overtightening syringes in microbore tubing.” In this case, a new type of tubing was being purchased by the hospital, tubing for which the connector had “wings” that made it too easy for nurses to get too much leverage into the twisting motion. Instead of asking nurses to be careful, a root cause analysis might point more to the tubing design or the hospital’s purchasing decision. Too many signs can, in and of themselves, present a safety hazard. If each bears a truly important admonition, the worker can develop what pilots call “alarm fatigue,” with too much confusing dialogue going on around them.

Of course, we would not want just to take down signs without first preventing the problem. Signs may be, at best, a short-term response (or a symptom of a problem) that can eventually be replaced with a root cause fix.

Types of Error Proofing

Error proofing can be defined as the creation of devices or methods that either prevent defects or inexpensively and automatically inspect the outcomes of a process each and every time to determine if quality is acceptable or defective. Error proofing is not a specific technology. Rather, it is a mindset and an approach that requires creativity among those who design equipment, design processes, or manage processes.

Make It Impossible to Create the Error

Ideally, error-proofing should be 100% effective in physically preventing an error from occurring. In everyday life, think about gasoline pump nozzles. Diesel nozzles are larger and do not fit into an unleaded gas vehicle. It is impossible to make that error. The error proofing is more effective than if we only relied on signs or warning labels that said: “Be careful; do not use diesel fuel in this vehicle.” This error-proofing method does not, however, prevent the opposite error of using unleaded fuel in a diesel vehicle (an error that, while harmful to a diesel engine, is less harmful than putting diesel into a regular car).

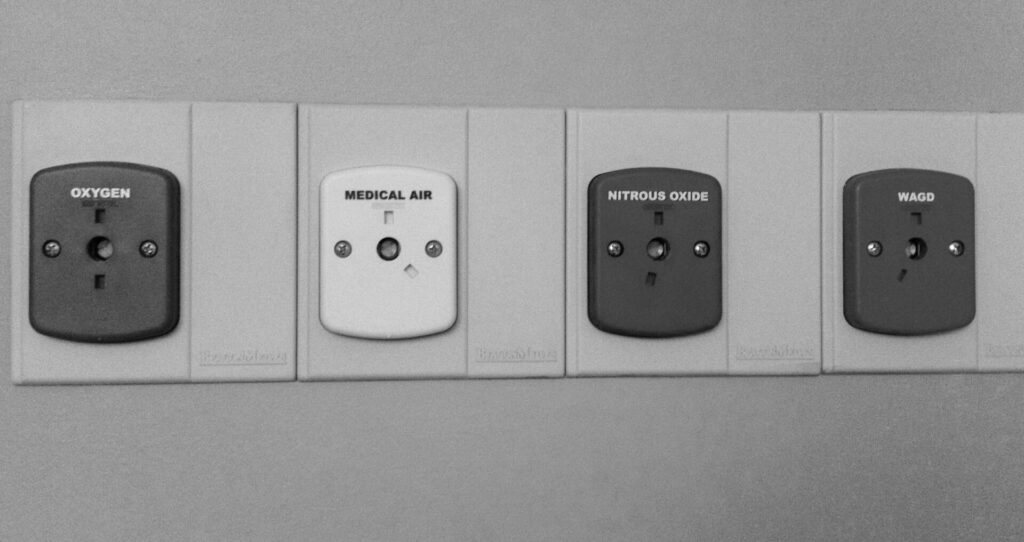

In a hospital, we can find examples of 100% error-proofing. One possible hospital error, similar to the gas pumps, is connecting a gas line to the wrong connector on the wall. Many regulators and gas lines have pins and indexing that prevent a user from connecting to the wrong line, such as medical air instead of oxygen, as shown in Figure 8.2. The different pins are more effective than color coding alone would have been. The connectors will not fit, and there is no way to circumvent the system to make them fit.[xvi]

One type of preventable error is the injection of the wrong solution into the wrong IV line, an error that has been reported at least 1,200 times over a five-year period, meaning it has likely occurred far more than that, given the common under-reporting of errors.[xvii] Instead of telling clinicians just to be careful, some hospitals have switched to IV tubing that physically cannot be connected to feeding syringes—a strong form of error proofing, similar to the gas line error proofing. Again, a physical device that prevents an incorrect connection is more effective than simply color-coding the lines, as some hospitals have done.

Make It Harder to Create the Error

It is not always possible to fully error-proof a process, so we can also aim to make it harder for errors to occur. Think about the software we use every day to create word-processing documents (or e-mail). One possible error is that the user accidentally clicks on the “close” button, which would lose the work. Most software requires a confirmation step, a box that pops up and asks, “Are you sure?” It is still possible for a user to accidentally click “yes,” but the error is less likely to occur. A better, more complete error proofing is software that continuously saves your work as a draft, preventing or severely minimizing data loss. Another type of error is forgetting to attach a file before hitting the send button. There are software options that look for the word “attached” or “attachment,” creating a warning message for the user if one tries to hit send without a file being attached.
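
The attachment reminder described above can be sketched in a few lines of Python. This is only an illustration of the idea, not how any particular e-mail program works: the function name `should_warn_before_send`, the word list, and the `attachment_count` parameter are all assumptions made for this example.

```python
import re

# Hypothetical word list: words that suggest the writer meant to attach a file.
ATTACHMENT_WORDS = re.compile(r"\battach(ed|ment|ments)?\b", re.IGNORECASE)


def should_warn_before_send(body: str, attachment_count: int) -> bool:
    """Return True when the message mentions an attachment but has none.

    This makes the error harder to commit; it does not make it impossible,
    because the user can still dismiss the warning and send anyway.
    """
    mentions_attachment = ATTACHMENT_WORDS.search(body) is not None
    return attachment_count == 0 and mentions_attachment


if __name__ == "__main__":
    print(should_warn_before_send("The report is attached.", 0))  # warn
    print(should_warn_before_send("The report is attached.", 1))  # no warning
```

Like the “Are you sure?” box, a check of this kind only reduces the chance of the error. A message that says “see the enclosed file” would slip past this word list, which is one reason a warning is weaker than a design that prevents the error outright.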

In hospitals, a certain type of infusion pump has a known issue that makes it too easy for a data entry error to occur—it even has its own name, the double-key bounce. The keypad often mistakenly registers a double-digit, so if a nurse enters 36, it might be taken as 366 and could lead to an overdose.[xviii] Some hospitals have posted signs asking employees to be careful. A better approach might be a change to the software that asks “Are you sure?” and requires an active response when double numbers are found or when a dose above a certain value is inadvertently entered. This approach would still not be as effective as a redesign or an approach that absolutely prevents that error from occurring. Such a change also would not prevent the mistaken entry of 90 instead of 9.0, highlighting the need for multiple countermeasures to address complex problems.
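
The kind of software check suggested above might look something like the following minimal sketch. It is not taken from any real infusion pump; the function name `confirmation_reasons`, the `soft_limit` parameter, and the limit values used in the example are hypothetical and chosen only to illustrate the logic.

```python
def confirmation_reasons(entered: str, soft_limit: float) -> list[str]:
    """Return the reasons a keyed-in value should trigger "Are you sure?".

    `entered` is the raw keypad text (for example "366"); `soft_limit` is a
    hypothetical threshold above which an active confirmation is required.
    An empty list means no prompt is needed.
    """
    reasons = []
    # Two identical digits in a row may be a double-key bounce (36 -> 366).
    if any(a == b and a.isdigit() for a, b in zip(entered, entered[1:])):
        reasons.append("repeated digit")
    # A value above the soft limit may be a bounce or a misplaced decimal.
    if float(entered) > soft_limit:
        reasons.append("above soft limit")
    return reasons


if __name__ == "__main__":
    for keyed in ("36", "366", "90", "9.0"):
        print(keyed, confirmation_reasons(keyed, soft_limit=100.0))
```

With a limit of 100, the entry 366 is flagged for both reasons, while 90 passes without any prompt even if 9.0 was intended. That gap is the point made above: a single check does not cover every way the entry can go wrong, so more than one countermeasure is needed.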

Hospitals have already made some efforts to error-proof medication administration, as medication errors are a common cause of harm to patients; yet some experts estimate there is still, on average, one medication error per day for each hospital patient.[xix] Automated storage cabinets are one error-proofing method that many hospitals have implemented to help ensure that nurses take the correct medications for their patients. With these cabinets, nurses must scan a bar code or enter a code to indicate the patient for whom they are taking medications. The computer-controlled cabinet opens just the drawer (and sometimes just the individual bin) that contains the correct medication. This makes it harder to take the wrong drug, but there are still errors that can occur:

- The nurse can reach into the wrong bin in the correct drawer (with some systems).

- The pharmacy tech might have loaded the wrong medication into the bin.

- The wrong medication might be in the correct package that the pharmacy tech loaded into the correct bin.

- The correct medication might still be given to the wrong patient after being taken from the cabinet.

When error-proofing, we have to take care that we do not create complacency and overreliance on a particular device. Properly error-proofing the entire process requires wider analysis and error-proofing methods at each step along the way.

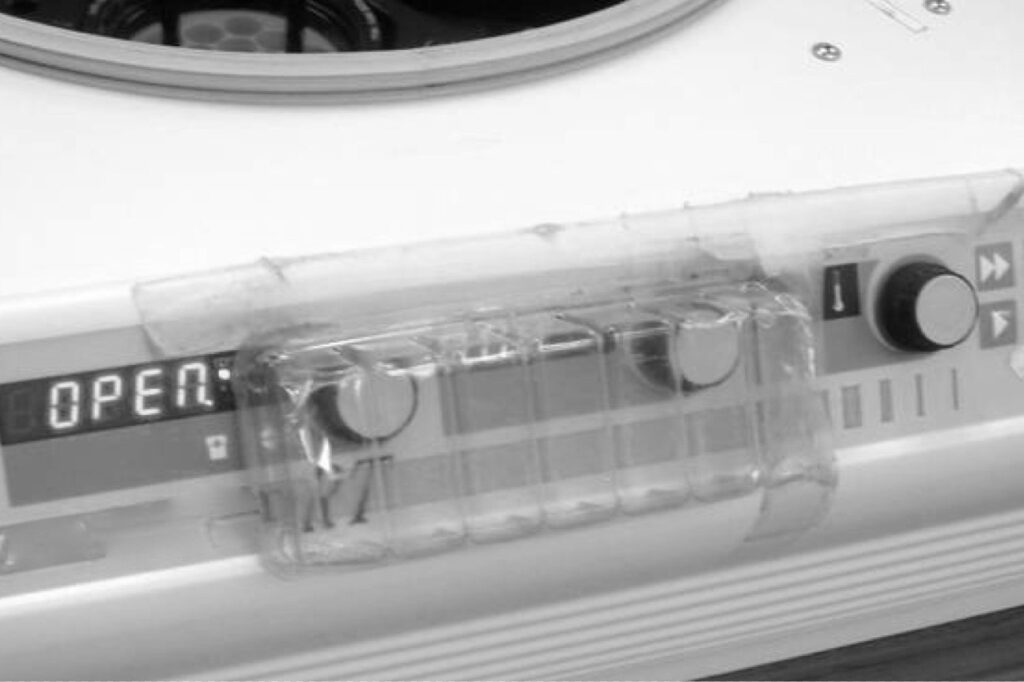

Often, the workplace can be retrofitted with simple, inexpensive error-proofing devices if we have the mindset of prevention. One laboratory implemented two simple error-proofing devices in a short time. In one area, a centrifuge had knobs that controlled timing and speed, knobs that were too easily bumped by a person walking by the machine. Instead of hanging a “be careful” sign, a technologist took a piece of clear plastic packaging material that would have otherwise been thrown away and put it over the knobs, as shown in Figure 8.3. The prevention method was effective, cost nothing, and was probably faster than making a sign. The mindset was in place to figure out how that error could be prevented.

In the microbiology area, visitors dropped off specimens on a counter and were often tempted to reach across the counter rather than walking to the end, where the official drop-off spot was located. The problem with reaching across the counter is that a person, unfamiliar with the area, could have easily burned himself or herself on a small incinerator that sat on the counter, without an indication that it was hot. Instead of posting warning signs, a manager had maintenance install a plexiglass shield that prevented people from reaching across the bench, as shown in Figure 8.4. It was a small investment but helped prevent injury better than a sign.

Make It Obvious the Error Has Occurred

Another error-proofing approach is to make it obvious when errors have occurred through automated checks or simple inspection steps. The early Toyota weaving loom did not prevent the thread from breaking, but quickly detected the problem, stopping the machine and preventing the bad fabric from being produced.

One possible error in instrument sterilization is that equipment malfunctions or misuse results in improper sterilization of an instrument pack. There might be multiple methods for error-proofing the equipment or its use, but common practice also uses special tape wraps or inserts that change colors or form black stripes to indicate proper sterilization. The absence of these visual indicators makes it obvious an error has occurred, helping protect the patient.

In a hospital setting, there is a risk that intubation tubes are inserted into the esophagus instead of the trachea, which would cause harm by preventing air from getting to the patient’s lungs. A 1998 study indicated this happens in 5.4% of intubations.[xx] Another study suggested that “unrecognized esophageal intubations” occurred in 2% of cases, meaning the error was not detected or reversed quickly. Warning signs posted on the device or in the emergency department would not be an effective error-proofing strategy. If we cannot engineer the device to ensure the tube only goes into the airway, we can perform a simple test after each insertion. Plastic aspiration bulbs can be provided so the caregiver can see if air from the patient’s lungs will reinflate a squeezed bulb within five seconds.[xxi] If not, we can suspect that the tube is in the esophagus, looking to correct the error before the patient is harmed. This form of error-proofing is not 100% reliable because it relies on people to adhere to the standardized work that they should check for proper placement.

Other error-proofing might include monitors and sensors that can automatically detect and signal the anesthesiologist that the patient was intubated incorrectly, although there are limitations to the effectiveness of monitoring oxygen saturation levels as an inspection step for this error. A chest X-ray might also be used as an inspection method, but studies showed that it is an ineffective method.[xxii] “Commonly used” clinical inspection methods, in this case, are often inaccurate, so medical evidence should drive the standardized work for how clinicians check for intubation errors.

Make the System Robust So It Tolerates the Error

At gas stations, there is a risk that a customer could drive away without detaching the pump nozzle from the car. Gas stations have error-proofed this not by physically preventing the error or by hanging signs; instead, they have anticipated that the error could occur and designed the system to be robust and allow for this. If a driver does drive off, the pump has a quick-release valve that snaps away and cuts off the flow of gasoline, preventing a spill or possible explosion (although you might look silly driving down the road with the nozzle and hose dragging from your vehicle).

In a laboratory, one hospital found a test instrument that was not designed to these error-proofing standards, as the instrument was not robust against spills of patient specimens. The lab had responded by posting two separate signs on the instrument that told employees: “Do Not Spill; Wipe Spills Immediately.” The signs did nothing to prevent the error, as employees were already trying not to spill patient specimens. The root cause of the situation was that a circuit board in the instrument was exposed underneath the place where specimens were loaded. A different hospital, using that same equipment, posted a memo notifying staff that they had “blown three electronic boards in just a few weeks,” which cost $1,000 each. The memo emphasized that people should not pour off specimens or handle liquids above the equipment as part of their standardized work. As a better root cause preventive measure, the designer of the instrument should have anticipated that patient specimen spills were likely to occur at some point in the instrument’s use and taken steps to protect the sensitive board.

One lesson for hospitals is to consider design and error proofing, even going through an FMEA exercise, when buying new equipment. Hospitals can pressure manufacturers and suppliers to build error-proofing devices into equipment, using their market power to reward suppliers who make equipment that is more robust against foreseeable errors.

Conclusion

In conclusion, the excerpt from Lean Hospitals: Improving Quality, Patient Safety, and Employee Engagement highlights a crucial aspect of healthcare: the prevalence of medical errors and the need for systemic improvements to prevent them. This issue ties directly into the themes discussed in The Mistakes That Make Us: Cultivating a Culture of Learning and Innovation. Both books emphasize the importance of understanding and rectifying mistakes, not to assign blame but to foster a culture of continuous learning and improvement.

The examples in Lean Hospitals of medical errors, such as the incidents involving Josie King and the Quaid twins, underscore the catastrophic consequences that can arise from process failures. These cases illustrate the need to move beyond a blame-centric view and focus on systemic changes. By adopting a Lean approach, which emphasizes error-proofing and quality at the source, healthcare institutions can significantly reduce the likelihood of such errors.

This approach aligns with the principles in The Mistakes That Make Us, which advocate for a culture that views mistakes as opportunities for learning and innovation. The book argues that by recognizing and understanding our errors, we can develop systems and processes that are more resilient and effective. In healthcare, this means creating an environment where mistakes are not just corrected but are used as catalysts for systemic improvements, leading to safer, more efficient, and patient-centric care.

Thus, the key takeaway from both books is the transformative power of understanding and leveraging mistakes. In Lean Hospitals, this is applied to healthcare processes, emphasizing practical steps for error-proofing and quality improvement. In The Mistakes That Make Us, the focus is broader, advocating for a cultural shift that embraces mistakes as essential for learning and innovation in any field. Together, these perspectives provide a comprehensive roadmap for organizations seeking to excel in today’s complex and ever-changing environment.

[i] Grady, Denise, “Study Finds No Progress in Safety at Hospitals,” New York Times, November 24, 2010, http://www.nytimes.com/2010/11/25/health/research/25patient.html (accessed October 16, 2015).

[ii] McCann, Erin, “Deaths by medical mistakes hit records,” http://www.healthcareitnews.com/news/deaths-by-medical-mistakes-hit-records (accessed October 16, 2015).

[iii] James, John T., PhD, “A New, Evidence-based Estimate of Patient Harms Associated with Hospital Care,” Journal of Patient Safety (September 2013 – Volume 9 – Issue 3 – p 122–128).

[iv] Allen, Marshall, “How Many Die From Medical Mistakes in U.S. Hospitals?” http://www.propublica.org/article/how-many-die-from-medical-mistakes-in-us-hospitals (accessed October 16, 2015).

[v] Classen, David C., Roger Resar, et al., “‘Global Trigger Tool’ Shows that Adverse Events in Hospitals May Be Ten Times Greater than Previously Measured,” Health Affairs, April 2011, 30, no. 4: 581–89.

[vi] Graban, Mark, “Statistics on Healthcare Quality and Patient Safety Problems – Errors & Harm,” http://www.leanblog.org/2009/08/statistics-on-healthcare-quality-and/ (accessed October 16, 2015).

[vii] Scholtes, Peter, The Leader’s Handbook (New York: McGraw-Hill Education, 1997), 92.

[viii] Josie King Foundation, “What Happened,” http://www.josieking.org/page.cfm?pageID=10 (accessed March 26, 2015).

[ix] Graban, Mark, “LeanBlog Podcast #78 – Sorrel King, Improving Patient Safety,” http://www.leanblog.org/78 (accessed October 16, 2015).

[x] Pronovost, Peter J., Safe Patients, Smart Hospitals: How One Doctor’s Checklist Can Help Us Change Health Care from the Inside Out (New York: Hudson Street Press, 2010), 1.

[xi] Kroft, Steve, “Dennis Quaid Recounts Twins’ Drug Ordeal,” 60 Minutes, March 16, 2008, http://www.cbsnews.com/news/dennis-quaid-recounts-twins-drug-ordeal/ (accessed April 6, 2015).

[xii] Davies, Tom, “Fatal Drug Mix-Up Exposes Hospital Flaws,” Washington Post, September 22, 2006, http://www.washingtonpost.com/wp-dyn/content/article/2006/09/22/AR2006092200815.html?nav=hcmodule (accessed March 26, 2015).

[xiii] Ornstein, Charles and Anna Gorman, “Possible medical mix-up for twins,” http://articles.latimes.com/2007/nov/21/local/me-twins21 (accessed October 16, 2015).

[xiv] Toyota Motor Corporation, “Toyota Production System,” http://www.toyota-global.com/company/vision_philosophy/toyota_production_system/ (accessed March 26, 2015).

[xv] Toyota Motor Corporation, “Toyota Production System,” http://www.toyota-global.com/company/vision_philosophy/toyota_production_system/ (accessed March 26, 2015).

[xvi] Grout, John, Mistake-Proofing the Design of Health Care Processes, AHRQ Publication 07-0020 (Rockville, MD: Agency for Healthcare Research and Quality, 2007), 41, 76.

[xvii] Wahlberg, David, “Day 1: Medical Tubing Mistakes Can Be Deadly,” Wisconsin State Journal, http://host.madison.com/news/local/health_med_fit/day-medical-tubing-mistakes-can-be-deadly/article_bc888491-1f7d-5008-be21-31009a4b253a.html (accessed April 6, 2015).

[xviii] “Double Key Bounce and Double Keying Errors,” Institute for Safe Medication Practices, January 12, 2006, http://www.ismp.org/Newsletters/acutecare/articles/20060112.asp?ptr=y (accessed March 26, 2015).

[xix] Anderson, Pamela and Terri Townsend, “Medication errors: Don’t let them happen to you,” http://www.americannursetoday.com/assets/0/434/436/440/6276/6334/6350/6356/8b8dac76-6061-4521-8b43-d0928ef8de07.pdf (accessed October 16, 2015).

[xx] Sakles, J. C., Laurin, E. G., Rantapaa, A. A., and Panacek, E., “Airway Management in the Emergency Department: A One-Year Study of 610 Tracheal Intubations,” Annals of Emergency Medicine, 1998, 31:325–32.

[xxi] Grout, Mistake-Proofing, 78.

[xxii] Dittrich, Kenneth C., MD, “Delayed Recognition of Esophageal Intubation,” CJEM Canadian Journal of Emergency Medical Care, 2002, 4, no. 1: 41–44. http://www.cjem-online.ca/v4/n1/p41 (accessed April 9, 2011).